Computers & Math

The Eye-Brain Connection: How Our Thoughts Shape What We See

A new study by biomedical engineers and neuroscientists shows that the brain’s visual regions play an active role in making sense of information.

Computer Modeling

Unveiling the Hidden Power of Quantum Computers: Scientists Discover Forgotten Particle that Could Unlock Universal Computation

Scientists may have uncovered the missing piece of quantum computing by reviving a particle once dismissed as useless. This particle, called the neglecton, could give fragile quantum systems the full power they need by working alongside Ising anyons. What was once considered mathematical waste may now hold the key to building universal quantum computers, turning discarded theory into a pathway toward the future of technology.

Computer Graphics

Cracking the Code: Scientists Achieve Breakthrough in Quantum Computing with a Single Atom

A research team has created a quantum logic gate that uses fewer physical qubits by encoding them with the Gottesman-Kitaev-Preskill (GKP) error-correction code. By entangling quantum vibrations inside a single atom, they achieved a milestone that could transform how quantum computers scale.

Civil Engineering

A Groundbreaking Magnetic Trick for Quantum Computing: Stabilizing Qubits with Exotic Materials

Researchers have unveiled a new quantum material that could make quantum computers much more stable by using magnetism to protect delicate qubits from environmental disturbances. Unlike traditional approaches that rely on rare spin-orbit interactions, this method uses magnetic interactions—common in many materials—to create robust topological excitations. Combined with a new computational tool for finding such materials, this breakthrough could pave the way for practical, disturbance-resistant quantum computers.

-

Detectors (10 months ago)

A New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight

-

Earth & Climate (11 months ago)

Retiring Abroad Can Be Lonely Business

-

Cancer (11 months ago)

Revolutionizing Quantum Communication: Direct Connections Between Multiple Processors

-

Albert Einstein (12 months ago)

Harnessing Water Waves: A Breakthrough in Controlling Floating Objects

-

Chemistry11 months ago

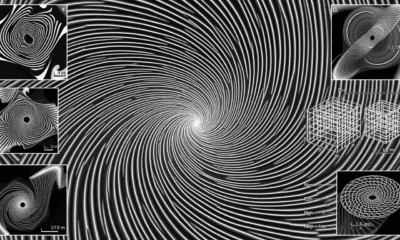

Chemistry11 months ago“Unveiling Hidden Patterns: A New Twist on Interference Phenomena”

-

Earth & Climate (11 months ago)

Household Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals

-

Agriculture and Food (11 months ago)

A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein

-

Diseases and Conditions (12 months ago)

Reducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention