Computational Biology

“Revolutionizing Sleep Analysis: New AI Model Analyzes Full Night of Sleep with High Accuracy”

Researchers have developed a powerful AI tool that processes an entire night’s sleep, built on the same transformer architecture used by large language models such as ChatGPT. Analyzing 1,011,192 hours of sleep, it is one of the largest studies of its kind to date. The model, called patch foundational transformer for sleep (PFTSleep), analyzes brain waves, muscle activity, heart rate, and breathing patterns and classifies sleep stages more effectively than traditional methods. This could streamline sleep analysis, reduce variability, and support future clinical tools for detecting sleep disorders and other health risks.

Computational Biology

A Quantum Leap Forward – New Amplifier Boosts Efficiency of Quantum Computers 10x

Engineers at Chalmers University of Technology have built a pulse-driven qubit amplifier that is ten times more efficient, stays cool, and safeguards quantum states, which is key to building bigger, better quantum machines.

Communications

Artificial Intelligence Isn’t Hurting Workers—It Might Be Helping

Despite widespread fears, early research suggests AI might actually be improving some aspects of work life. A major new study examining 20 years of worker data in Germany found no signs that AI exposure is hurting job satisfaction or mental health. In fact, there is evidence that it may be subtly improving physical health, especially for workers without college degrees, by reducing physically demanding tasks. However, researchers caution that it is still early days.

Artificial Intelligence

Transistors Get a Boost: Scientists Develop New, More Efficient Material

Shrinking silicon transistors have reached their physical limits, but a team from the University of Tokyo is rewriting the rules. They’ve created a cutting-edge transistor using gallium-doped indium oxide with a novel “gate-all-around” structure. By precisely engineering the material’s atomic structure, the new device achieves remarkable electron mobility and stability. This breakthrough could fuel faster, more reliable electronics powering future technologies from AI to big data systems.

-

Detectors2 months ago

Detectors2 months agoA New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight

-

Earth & Climate4 months ago

Earth & Climate4 months agoRetiring Abroad Can Be Lonely Business

-

Cancer3 months ago

Cancer3 months agoRevolutionizing Quantum Communication: Direct Connections Between Multiple Processors

-

Agriculture and Food3 months ago

Agriculture and Food3 months ago“A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein”

-

Diseases and Conditions4 months ago

Diseases and Conditions4 months agoReducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention

-

Albert Einstein4 months ago

Albert Einstein4 months agoHarnessing Water Waves: A Breakthrough in Controlling Floating Objects

-

Earth & Climate3 months ago

Earth & Climate3 months agoHousehold Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals

-

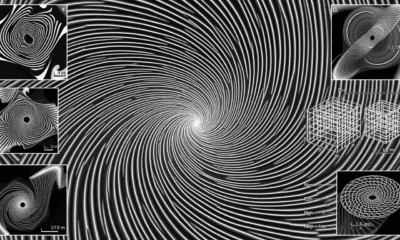

Chemistry3 months ago

Chemistry3 months ago“Unveiling Hidden Patterns: A New Twist on Interference Phenomena”