While we try to keep things accurate, this content is part of an ongoing experiment and may not always be reliable.

Please double-check important details — we’re not responsible for how the information is used.

Artificial Intelligence

Shedding Light on Shadow Branches: Revolutionizing Computing Efficiency in Modern Data Centers

Researchers have developed a new technique called ‘Skia’ to help computer processors better predict future instructions and improve computing performance.

Artificial Intelligence

Revolutionizing Computing with the ‘Microwave Brain’ Chip

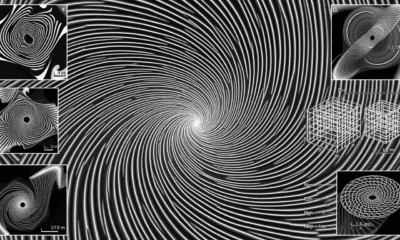

Cornell engineers have built the first fully integrated “microwave brain”: a silicon microchip that can process ultrafast data and wireless communication signals simultaneously while using less than 200 milliwatts of power. Instead of computing in discrete digital steps, it uses analog microwave physics to perform real-time tasks such as radar tracking, signal decoding, and anomaly detection. Because this neural network design computes directly in the analog domain, it bypasses traditional digital processing bottlenecks and achieves high accuracy without the extra circuitry or energy demands of digital systems.

Artificial Intelligence

Tiny ‘Talking’ Robots Form Shape-Shifting Swarms That Heal Themselves

Scientists have designed swarms of microscopic robots that communicate and coordinate using sound waves, much like bees or birds. These self-organizing micromachines can adapt to their surroundings, reform if damaged, and potentially undertake complex tasks such as cleaning polluted areas, delivering targeted medical treatments, or exploring hazardous environments.

Artificial Intelligence

Accelerating Evolution: The Power of T7-ORACLE in Protein Engineering

Researchers at Scripps have created T7-ORACLE, a powerful new tool that speeds up evolution, allowing scientists to design and improve proteins thousands of times faster than nature. Using engineered bacteria and a modified viral replication system, this method can create new protein versions in days instead of months. In tests, it quickly produced enzymes that could survive extreme doses of antibiotics, showing how it could help develop better medicines, cancer treatments, and other breakthroughs far more quickly than ever before.

- Detectors (11 months ago): A New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight
- Earth & Climate (12 months ago): Retiring Abroad Can Be Lonely Business
- Cancer (11 months ago): Revolutionizing Quantum Communication: Direct Connections Between Multiple Processors
- Albert Einstein (12 months ago): Harnessing Water Waves: A Breakthrough in Controlling Floating Objects
- Chemistry (11 months ago): Unveiling Hidden Patterns: A New Twist on Interference Phenomena
- Earth & Climate (11 months ago): Household Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals
- Agriculture and Food (11 months ago): A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein
- Diseases and Conditions (12 months ago): Reducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention