Computers & Math

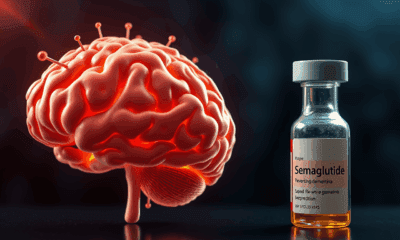

The Eye-Brain Connection: How Our Thoughts Shape What We See

A new study by biomedical engineers and neuroscientists shows that the brain’s visual regions play an active role in making sense of information.

Computers & Math

Quantum Computers Just Beat Classical Ones – Exponentially and Unconditionally

A research team has achieved the holy grail of quantum computing: an exponential speedup that’s unconditional. By using clever error correction and IBM’s powerful 127-qubit processors, they tackled a variation of Simon’s problem, showing quantum machines are now breaking free from classical limitations, for real.

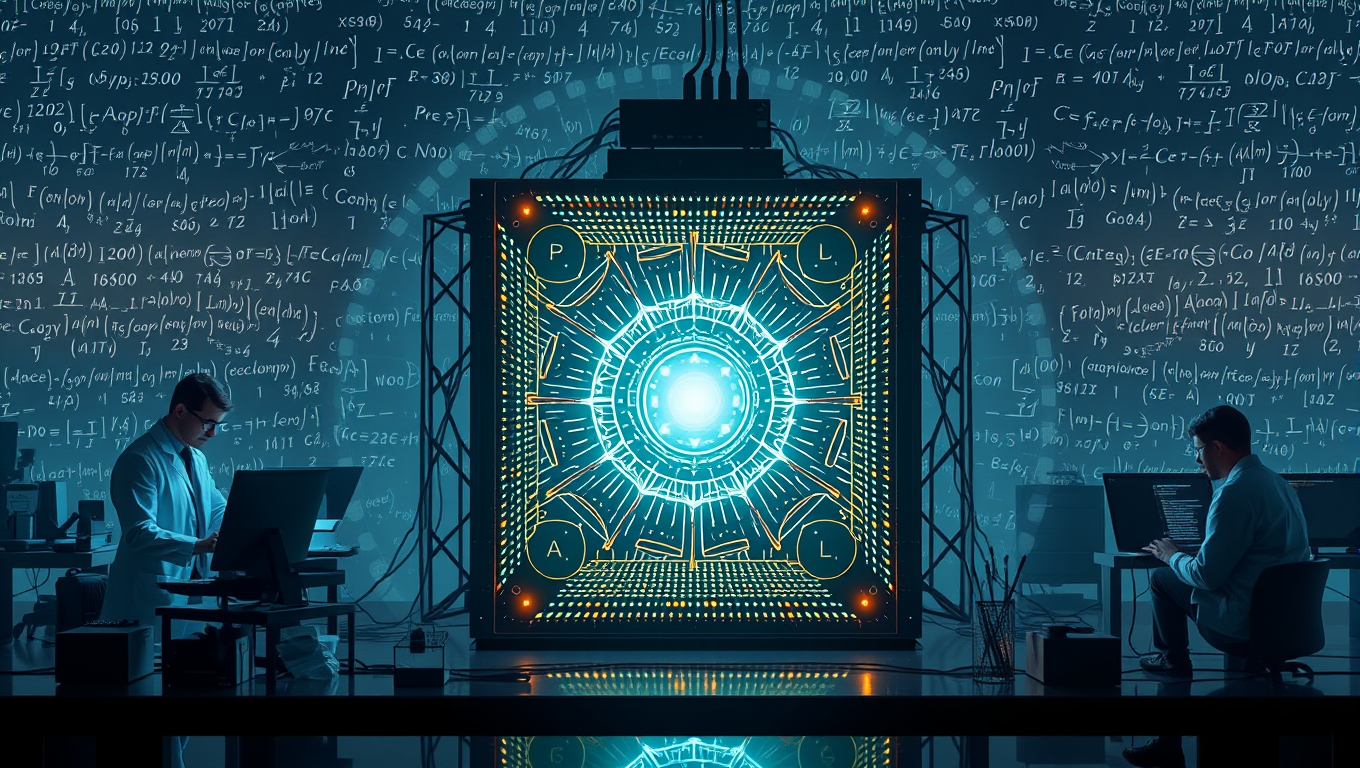

Computational Biology

A Quantum Leap Forward – New Amplifier Boosts Efficiency of Quantum Computers 10x

Chalmers engineers built a pulse-driven qubit amplifier that’s ten times more efficient, stays cool, and safeguards quantum states—key for bigger, better quantum machines.

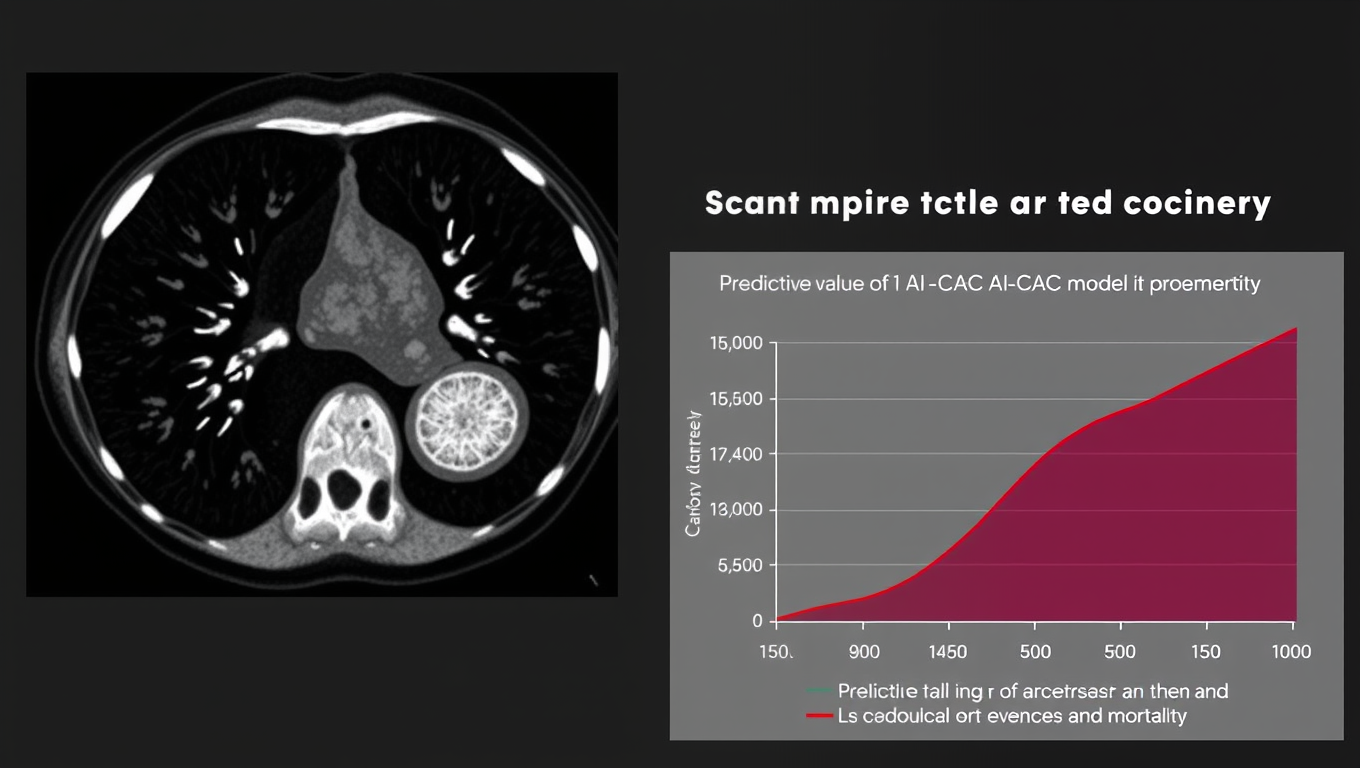

Artificial Intelligence

AI Uncovers Hidden Heart Risks in CT Scans: A Game-Changer for Cardiovascular Care

What if your old chest scans—taken years ago for something unrelated—held a secret warning about your heart? A new AI tool called AI-CAC, developed by Mass General Brigham and the VA, can now comb through routine CT scans to detect hidden signs of heart disease before symptoms strike.

-

Detectors3 months ago

Detectors3 months agoA New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight

-

Earth & Climate4 months ago

Earth & Climate4 months agoRetiring Abroad Can Be Lonely Business

-

Cancer3 months ago

Cancer3 months agoRevolutionizing Quantum Communication: Direct Connections Between Multiple Processors

-

Agriculture and Food3 months ago

Agriculture and Food3 months ago“A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein”

-

Diseases and Conditions4 months ago

Diseases and Conditions4 months agoReducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention

-

Chemistry3 months ago

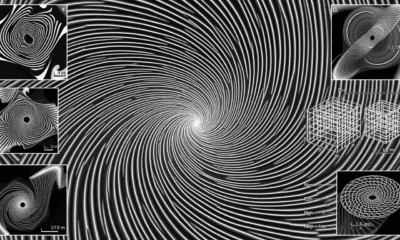

Chemistry3 months ago“Unveiling Hidden Patterns: A New Twist on Interference Phenomena”

-

Albert Einstein4 months ago

Albert Einstein4 months agoHarnessing Water Waves: A Breakthrough in Controlling Floating Objects

-

Earth & Climate3 months ago

Earth & Climate3 months agoHousehold Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals