Computer Graphics

3D Streaming Gets Leaner: Predicting Visible Content for Immersive Experiences

A new approach to streaming technology may significantly improve how users experience virtual reality and augmented reality environments, according to a new study. The research describes a method for directly predicting visible content in immersive 3D environments, potentially reducing bandwidth requirements by up to 7-fold while maintaining visual quality.

Computer Graphics

Cracking the Code: Scientists Achieve a Breakthrough in Quantum Computing with a Single Atom

A research team has created a quantum logic gate that uses fewer qubits by encoding them with the Gottesman-Kitaev-Preskill (GKP) error-correction code. By entangling quantum vibrations inside a single atom, they achieved a milestone that could transform how quantum computers scale.

Computer Graphics

The Quiet Threat to Trust: How Overreliance on AI Emails Can Harm Workplace Relationships

AI is now a routine part of workplace communication, with most professionals using tools like ChatGPT and Gemini. A study of over 1,000 professionals shows that while AI makes managers’ messages more polished, heavy reliance can damage trust. Employees tend to accept low-level AI help, such as grammar fixes, but become skeptical when supervisors use AI extensively, especially for personal or motivational messages. This “perception gap” can lead employees to question a manager’s sincerity, integrity, and leadership ability.

Child Development

Pain Relief Without Pills? VR Nature Scenes Activate Brain’s Healing Switch

Stepping into a virtual forest or waterfall scene through VR could be the future of pain management. A new study shows that immersive virtual nature dramatically reduces pain sensitivity almost as effectively as medication. Researchers at the University of Exeter found that the more present participants felt in these 360-degree nature experiences, the stronger the pain-relieving effects. Brain scans confirmed that immersive VR scenes activated pain-modulating pathways, revealing that our brains can be coaxed into suppressing pain by simply feeling like we’re in nature.

-

Detectors10 months ago

Detectors10 months agoA New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight

-

Earth & Climate11 months ago

Earth & Climate11 months agoRetiring Abroad Can Be Lonely Business

-

Cancer11 months ago

Cancer11 months agoRevolutionizing Quantum Communication: Direct Connections Between Multiple Processors

-

Albert Einstein11 months ago

Albert Einstein11 months agoHarnessing Water Waves: A Breakthrough in Controlling Floating Objects

-

Chemistry10 months ago

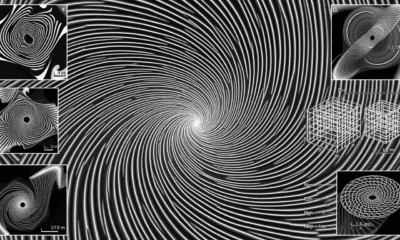

Chemistry10 months ago“Unveiling Hidden Patterns: A New Twist on Interference Phenomena”

-

Earth & Climate10 months ago

Earth & Climate10 months agoHousehold Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals

-

Agriculture and Food11 months ago

Agriculture and Food11 months ago“A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein”

-

Diseases and Conditions11 months ago

Diseases and Conditions11 months agoReducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention