While we try to keep things accurate, this content is part of an ongoing experiment and may not always be reliable.

Please double-check important details — we’re not responsible for how the information is used.

Computer Programming

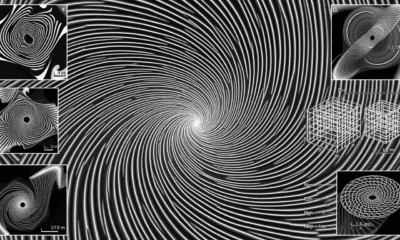

The Limits of Precision: How AI Can Help Us Reach the Edge of What Physics Allows

Scientists have uncovered how close we can get to perfect optical precision using AI, despite the physical limitations imposed by light itself. By combining physics theory with neural networks trained on distorted light patterns, they showed it’s possible to estimate object positions with nearly the highest accuracy allowed by nature. This breakthrough opens exciting new doors for applications in medical imaging, quantum tech, and materials science.

Computer Graphics

Cracking the Code: Scientists Achieve a Quantum Computing Breakthrough with a Single Atom

A research team has created a quantum logic gate that needs fewer qubits because it encodes them with the Gottesman-Kitaev-Preskill (GKP) error-correction code. By entangling quantum vibrations inside a single atom, the team reached a milestone that could change how quantum computers scale.

Computer Graphics

The Quiet Threat to Trust: How Overreliance on AI Emails Can Harm Workplace Relationships

AI is now a routine part of workplace communication, with most professionals using tools like ChatGPT and Gemini. A study of over 1,000 professionals shows that while AI makes managers’ messages more polished, heavy reliance can damage trust. Employees tend to accept low-level AI help, such as grammar fixes, but become skeptical when supervisors use AI extensively, especially for personal or motivational messages. This “perception gap” can lead employees to question a manager’s sincerity, integrity, and leadership ability.

Computer Programming

Revolutionizing Materials Discovery: AI-Powered Lab Finds New Materials 10x Faster

A new leap in lab automation is changing how scientists discover materials. By switching from slow, traditional methods to real-time, dynamic chemical experiments, researchers have built a self-driving lab that collects 10 times more data, drastically accelerating progress. The system saves time and resources, and it could speed up breakthroughs in clean energy, electronics, and sustainability, moving toward a future where lab discoveries take days rather than years.

- Detectors, 10 months ago: A New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight
- Earth & Climate, 12 months ago: Retiring Abroad Can Be Lonely Business
- Cancer, 11 months ago: Revolutionizing Quantum Communication: Direct Connections Between Multiple Processors
- Albert Einstein, 12 months ago: Harnessing Water Waves: A Breakthrough in Controlling Floating Objects
- Chemistry, 11 months ago: Unveiling Hidden Patterns: A New Twist on Interference Phenomena
- Earth & Climate, 11 months ago: Household Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals
- Agriculture and Food, 11 months ago: A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein
- Diseases and Conditions, 12 months ago: Reducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention