While we try to keep things accurate, this content is part of an ongoing experiment and may not always be reliable.

Please double-check important details — we’re not responsible for how the information is used.

Artificial Intelligence

Unlocking Digital Carpentry for Everyone

Many products in the modern world are in some way fabricated using computer numerical control (CNC) machines, which use computers to automate machine operations in manufacturing. While simple in concept, the ways to instruct these machines is in reality often complex. A team of researchers has devised a system to demonstrate how to mitigate some of this complexity.

Artificial Intelligence

Scientists Uncover the Secret to AI’s Language Understanding: A Phase Transition in Neural Networks

Neural networks first treat sentences like puzzles solved by word order, but once they read enough, a tipping point sends them diving into word meaning instead—an abrupt “phase transition” reminiscent of water flashing into steam. By revealing this hidden switch, researchers open a window into how transformer models such as ChatGPT grow smarter and hint at new ways to make them leaner, safer, and more predictable.

Artificial Intelligence

The Quantum Drumhead Revolution: A Breakthrough in Signal Transmission with Near-Perfect Efficiency

Researchers have developed an ultra-thin drumhead-like membrane that lets sound signals, or phonons, travel through it with astonishingly low loss, better than even electronic circuits. These near-lossless vibrations open the door to new ways of transferring information in systems like quantum computers or ultra-sensitive biological sensors.

Artificial Intelligence

AI Uncovers Hidden Heart Risks in CT Scans: A Game-Changer for Cardiovascular Care

What if your old chest scans—taken years ago for something unrelated—held a secret warning about your heart? A new AI tool called AI-CAC, developed by Mass General Brigham and the VA, can now comb through routine CT scans to detect hidden signs of heart disease before symptoms strike.

-

Detectors3 months ago

Detectors3 months agoA New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight

-

Earth & Climate4 months ago

Earth & Climate4 months agoRetiring Abroad Can Be Lonely Business

-

Cancer4 months ago

Cancer4 months agoRevolutionizing Quantum Communication: Direct Connections Between Multiple Processors

-

Agriculture and Food4 months ago

Agriculture and Food4 months ago“A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein”

-

Diseases and Conditions4 months ago

Diseases and Conditions4 months agoReducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention

-

Chemistry4 months ago

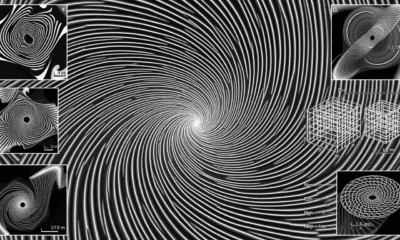

Chemistry4 months ago“Unveiling Hidden Patterns: A New Twist on Interference Phenomena”

-

Albert Einstein4 months ago

Albert Einstein4 months agoHarnessing Water Waves: A Breakthrough in Controlling Floating Objects

-

Earth & Climate4 months ago

Earth & Climate4 months agoHousehold Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals