Computational Biology

Unlocking the Code: AI-Powered Diagnosis for Drug-Resistant Infections

Scientists have developed an artificial intelligence-based method to more accurately detect antibiotic resistance in deadly bacteria, such as those that cause tuberculosis and staph infections. The method could lead to faster and more effective treatments and help slow the rise of drug-resistant infections, a growing global health crisis.

Artificial Intelligence

Tiny ‘Talking’ Robots Form Shape-Shifting Swarms That Heal Themselves

Scientists have designed swarms of microscopic robots that communicate and coordinate using sound waves, much like bees or birds. These self-organizing micromachines can adapt to their surroundings, re-form if damaged, and potentially undertake complex tasks such as cleaning polluted areas, delivering targeted medical treatments, or exploring hazardous environments.

Computational Biology

Quantum Leap Forward: Finnish Researchers Achieve Record-Breaking Qubit Coherence

Physicists at Aalto University in Finland have set a new benchmark in quantum computing by achieving a record millisecond-scale coherence time in a transmon qubit, nearly doubling prior limits. This development opens the door to more powerful and stable quantum computations and also reduces the burden of error correction.

Computational Biology

A Quantum Leap Forward – New Amplifier Boosts Efficiency of Quantum Computers 10x

Chalmers engineers built a pulse-driven qubit amplifier that is ten times more efficient, stays cool, and safeguards quantum states, all of which are key for bigger, better quantum machines.

- A New Horizon for Vision: How Gold Nanoparticles May Restore People’s Sight (Detectors, 11 months ago)

- Retiring Abroad Can Be Lonely Business (Earth & Climate, 12 months ago)

- Revolutionizing Quantum Communication: Direct Connections Between Multiple Processors (Cancer, 11 months ago)

- Harnessing Water Waves: A Breakthrough in Controlling Floating Objects (Albert Einstein, 12 months ago)

-

Chemistry11 months ago

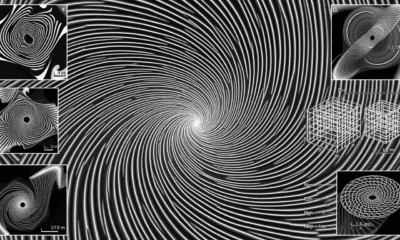

Chemistry11 months ago“Unveiling Hidden Patterns: A New Twist on Interference Phenomena”

- Household Electricity Three Times More Expensive Than Upcoming ‘Eco-Friendly’ Aviation E-Fuels, Study Reveals (Earth & Climate, 11 months ago)

- A Sustainable Solution: Researchers Create Hybrid Cheese with 25% Pea Protein (Agriculture and Food, 11 months ago)

- Reducing Falls Among Elderly Women with Polypharmacy through Exercise Intervention (Diseases and Conditions, 12 months ago)